Shadow Bans Only Fool Humans, Not Bots

Platforms claim shadow bans defeat bots, but that's wrong: only captchas fool bots.

Human ingenuity comes from "the most unlikely places," according to Charles Koch in his latest book, Believe in People: Bottom-Up Solutions For A Top-Down World (2020). Koch argues that solutions to "society's most pressing problems" do not come from top-down sources like wealth, fame or royalty. Rather, the answers come from the bottom up, from those nearest and most impacted by the problems.

And that is why social media platforms' widespread use of shadow banning (the practice of showing users their removed commentary as if it had not been removed) is so perplexing. How did we come to adopt a practice that is the antithesis of believing in bottom-up solutions? Every day, platforms remove or demote millions of comments, and quite often, those comments' authors have no idea any action was taken because the system hides those actions.

Platforms have long held that shadow banning is necessary to deal with spam, but shadow bans do not fool bots. Shockingly, it turns out that when platforms talk about "spam," they're referring to content written by you and me, genuine users. But they have not been upfront about this definition.

In 2015 Steve Huffman, Reddit's CEO, returned to lead the platform he co-founded. He submitted an "Ask Me Anything" post to Reddit, where one user asked:

Huffman, who goes by the handle spez, initially responded:

Despite Huffman’s justifications, shadow bans are just a surreptitious attempt to moderate content. And, unfortunately, according to journalist Cory Doctorow, "there is no security in obscurity." In other words, relying on secrecy only benefits rogue actors who make it their profession to discover secrets.

As a programmer and web developer myself, I reject the argument that shadow bans are useful against spam bots. If mere secrecy fooled bots, then captchas would play no role in authenticating humans. Further, it would be trivial for a programmer like me to build a bot that checks the status of its own content; in less than a second, a bot can retrieve a web page from the perspective of another user and discover if its posts have been moderated or banned.

What's more, once bot-authors discover how shadow bans work, they can reproduce content faster than humans. Thus, when platforms use secretive moderation techniques, bots gain an advantage while humans are elbowed out.

Genuine users may sometimes go years without discovering they have been shadow banned by a platform. When users do discover that some content they have been posting has been invisible to all other users, they say:

But relatively few users discover their shadow bans. Therefore, a shadow ban is more like a captcha that defeats humans.

Imagine living under a government with a secret code of conduct. If you are not close with the authorities, you will not know what behavior is acceptable. Maybe you aren't able to open a bank account and the banks give you spurious reasons for that, or you can't travel or register to vote.

Citizens would object to such obvious inequalities imposed by the government, and they also expect transparency when interacting with each other. For instance, I have the right to remove an offensive person from my own home, but choosing to instead shame them behind their back is awkward, ineffectual, and likely to cause more problems down the road. As a result, society discourages secretive shaming.

Mr. Huffman claimed real users on Reddit should never be shadow banned, yet his platform is shadow banning tons of content daily. A few weeks after Huffman's post, another Reddit staffer contradicted the CEO by stating that shadow bans were still used against real people:

But that is not Reddit's only kind of shadow ban. Currently, whenever a volunteer moderator removes a comment, Reddit shows its author the comment as if no action has been taken against it. So when Huffman said "real users should never be shadowbanned," he was glossing over the fact that all comment removals on Reddit have always, by default, been shadow bans. Huffman never clarified that either he or his staff misspoke, despite users pointing out the hypocrisy. The false notion that shadow bans help combat spam has continued to propagate ever since. Virtually every other platform has adopted secretive content moderation as its standard policy.

Years later, Huffman still claims shadow bans are useful against "spam." Sadly, this exception for shadow banning turns out to be a gaping hole. When I look up a random Redditor, their removal history almost always reveals removed commentary. And, chances are, moderators did not notify them of the removal.

Reddit and Facebook both allow volunteer moderators to shadow ban other users, yet the Electronic Frontier Foundation gave them stars for "providing meaningful notice to users of every content takedown and account suspension."

Further, Reddit inspired Facebook's use of shadow bans, according to former Reddit CTO Jeremy Edberg:

Other platforms like YouTube, Twitter/X and TikTok have followed suit. Thus, shadow banning was popularized on today's platforms by Huffman's implementation on Reddit.

In 2018, the EFF appeared to lead an effort to hold platforms accountable called the Santa Clara Principles. But these principles do not require platforms to notify users when content "amounts to spam." Even Europe's Digital Services Act, which purports to ensure a "transparent online environment," permits shadow banning "bots" and "fake accounts." And the Washington Post's coverage of shadow banning does not dispute the idea that shadow bans help "tamp down spam."

But shadow bans do not just fail to fool bots; they also fail to fool the real users who are repeat offenders. One only needs to search r/ModSupport for "ban evader" to reveal near-daily posts from moderators requesting additional support from Reddit. Shadow bans are only effective against good-faith users, and they are only used by moderators who believe that secretly removing content is ethical. Thus, ironically, shadow bans end up empowering bots and ban evaders.

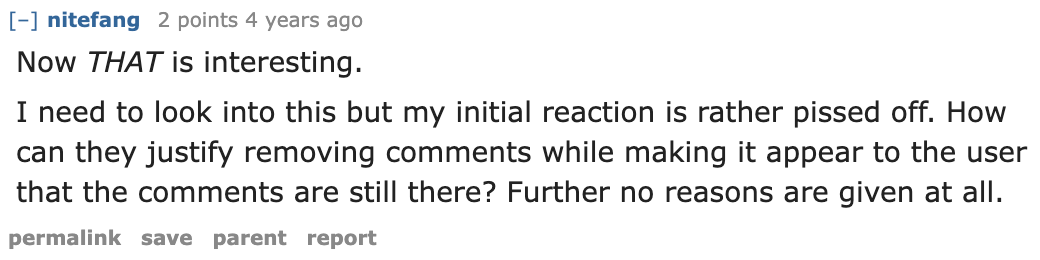

Moderators themselves rarely use the publicly understood term "shadow ban." Instead, they claim to "quietly remove" content, as one Reddit admin put it here:

Moderators may also argue for exceptions. One responded to Huffman's comment:

Some time later, Huffman modified his original comment to remove "or moderators," so that it now read:

With this edit, Huffman allowed volunteer moderators to continue shadow banning users. That is a massive problem. Ordinarily, a company would have to hire more staff to perform such work, because even the best AI will never outmatch a human's ability to determine the meaning of comments. AI is, after all, trained on human-generated data. So, a platform's ability to manipulate discourse by allowing its own employees to shadow ban is limited. But when platforms and civil society permit secrecy by volunteers, increased use of shadow bans does not result in increased costs. Without pushback from society, instances of shadow banning thus become unlimited.

Twitter's former head of Trust and Safety, Yoel Roth, has claimed that X cannot disclose the true status of its shadow banned users without first categorizing the reasons for the shadow bans. But this argument is a straw man. It ignores the fact that platforms can (and should) notify users about bans even when reasons for bans are unavailable. Presumably Roth, upon reviewing those reasons, would continue to shadow ban certain users. If so, he still promotes society's "exceptional" use of shadow bans where its exercise is, in fact, unlimited.

Some Reddit moderators also claim that mere notification is too laborious and thus impractical. There is even one content moderation researcher who admits to "shadow ban[ning] people on a regular basis." There are likely more researchers who think similarly, given that Reddit moderation is heavily researched by several of r/science's 1,500+ moderators.

Despite what many content moderators say, society is better off without shadow bans. That way, everyone can see the consequences of their actions. Those who claim that shadow bans fool bots are just inventing another straw man. Thus, civil society must demand that platforms renounce their use of such deceptive tooling. Eradicating shadow bans will advance users' freedom to communicate without hampering platforms' ability to address bot-generated spam.
